The Scam

In July 2022, Kerala witnessed its first reported deepfake fraud case. PS Radhakrishnan, a 73-year-old retired central government employee of Coal India, fell victim to an elaborate scam and lost Rs. 40,000. The scammer used artificial intelligence (AI)-generated deepfake technology to impersonate his former colleague, Venu Kumar, and trick him into transferring money.

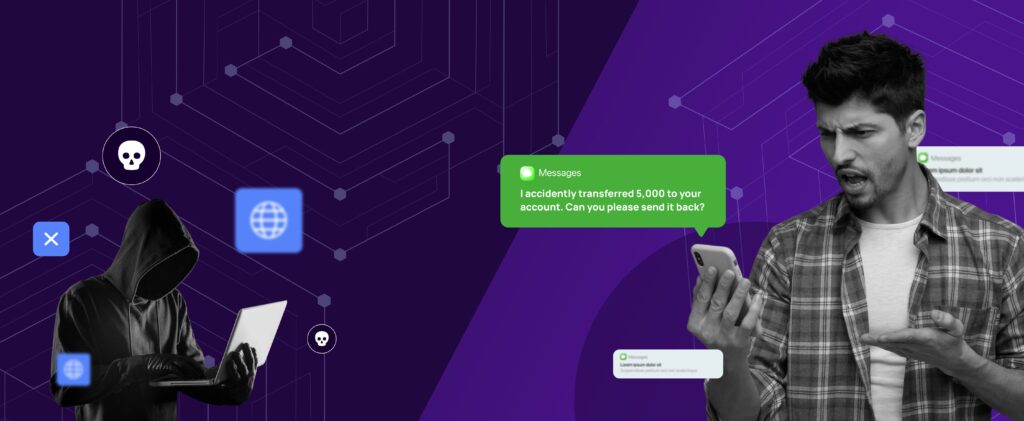

It started with a WhatsApp message from an unknown number. The scammer claimed to be Venu Kumar and had a profile picture matching the real person. The conversation seemed natural since he asked about Radhakrishnan’s family, work, and their mutual colleagues. Over time, the scammer built credibility by sharing personal details that only a genuine acquaintance would know.

Then came the request for money. The scammer claimed to be at Dubai airport, preparing to fly to India, but in urgent need of cash. His sister-in-law required emergency surgery in Mumbai, and an advance payment of Rs. 40,000 had to be made immediately.

Radhakrishnan was cautious and sceptical. He expressed concerns about scams and online fraud. The scammer, sensing hesitation, offered to get on a video call immediately. When Radhakrishnan answered, he saw a familiar face. It was Venu Kumar! He even had the same voice. The lips and eyes moved naturally, matching the words being spoken.

The call lasted only 25 seconds before disconnecting. However, that short moment was enough to convince Radhakrishnan that it was indeed his former colleague in dire need of help. Without further hesitation, he transferred the money via UPI to an account provided by the scammer.

Minutes later, the scammer called again, asking for additional funds for hospital expenses. This time, something felt off. The urgency in the caller’s voice raised suspicions. Radhakrishnan lied, saying his account had insufficient balance. Then, he called Venu Kumar directly using the number he had saved in his phone. To his shock, Venu Kumar said he had never contacted him nor requested money.

Realizing he had been scammed, Radhakrishnan reported the incident to the National Cyber Cell, which forwarded the case to Kerala police.

The Investigation

Kerala police acted swiftly, tracing the transferred funds to an RBL Bank account in Maharashtra. The phone number used for the scam was linked to Gujarat, but the true mastermind remained elusive.

The suspect, Kaushal Shah, was eventually identified. A 41-year-old with a history of gambling addiction, Shah came from a financially stable family but had been borrowing money to support his habit. After cutting ties with his family in 2018, he allegedly turned to cyber fraud.

Investigators discovered that Shah had meticulously researched his targets. He gathered personal details from social media and other sources, tailoring his scams to specific victims. He used advanced AI-driven deepfake technology to clone voices and generate realistic video as well.

Shah operated alone, frequently changing phone numbers and using multiple bank accounts to avoid detection. The police registered a case under IPC Section 420 (cheating) and Sections 66C and 66D of the IT Act, 2000. They managed to freeze the fraudulent bank account and stepped up efforts to recover Radhakrishnan’s lost money.

As AI advances globally, cybercriminals are finding new ways to exploit trust and manipulate unsuspecting victims in India.

How to Stay Safe:

- Verify Directly: If someone asks for money, always confirm by calling their saved number or meeting them in person.

- Be Sceptical of Video Calls: Deepfake technology can create realistic videos. On closer inspection, however, you can often spot something amiss. Look for unnatural blinking, lip sync issues, or other visual inconsistencies.

- Limit Personal Information Online: Scammers use publicly available data to personalize their fraud attempts. Avoid sharing personal details on social media.

- Enable Two-Factor Authentication (2FA): Strengthen security on financial and social media accounts to prevent unauthorized access.

- Report Suspicious Activity: If you suspect fraud, report it to cybercrime authorities immediately.

The Kerala scam shows how believably AI can be used to undermine trust. This is not just about one victim; it is a wake-up call for all of us. Technology can be a force for good or a weapon for mass deception if it is not managed responsibly. Radhakrishnan’s story is a reminder that we are all vulnerable, and that is reason enough to stay aware, vigilant, and sceptical of suspicious digital interactions.

Common Scams